This New AI Chip Has 1.2 Trillion Transistors and Is Officially the World’s Biggest Processor!

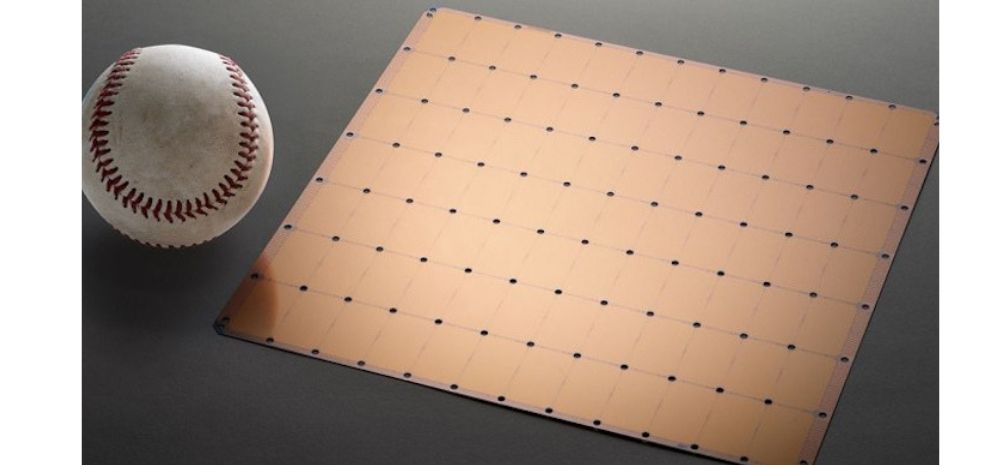

Cerebras Systems, a startup from California, has built the biggest processor in the world, packing 1.2 trillion transistors. The processor is called the Cerebras Wafer Scale Engine, and the company describes it as the ‘heart of our deep learning system’.

For a startup like Cerebras Systems, this is a huge feat in the world of electronics, and it is likely to speed things up in the field of Artificial Intelligence. A bigger chip is not automatically a faster one, but keeping this much compute on a single piece of silicon lets the cores exchange data without the slower trip between separate chips. And this one has already set the record as the largest chip ever built!

Find out the details and the future of the Cerebras Wafer Scale Engine, the world’s largest processor right below!

Cerebras Wafer Scale Engine: World’s Largest Processor

While most companies in the processor industry try to shrink their transistors so that more of them fit on a chip, Cerebras Systems has chosen a different path and built a much larger processor instead.

The Cerebras Wafer Scale Engine is 57 times the size of Nvidia Corp.’s V100, which was Nvidia’s top data center GPU at the time.

In a statement, Cerebras said it developed and manufactured this largest-ever chip to meet the ‘computational requirements of AI’. The company states, “The Cerebras Wafer Scale Engine (WSE) is 46,225 millimeters square, contains more than 1.2 trillion transistors, and is entirely optimized for deep learning work.”
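As a rough sanity check on the “57 times” comparison, the ratio can be worked out from the WSE’s quoted area. This sketch assumes a V100 die area of about 815 mm², a figure that does not appear in this article, and it also computes the average transistor density implied by the company’s own numbers.

```python
# Figures quoted by Cerebras in the article
WSE_AREA_MM2 = 46_225        # die area in square millimeters
WSE_TRANSISTORS = 1.2e12     # "more than 1.2 trillion" transistors

# Assumption (not from the article): Nvidia's V100 die is roughly 815 mm^2
V100_AREA_MM2 = 815

# How many V100 dies would fit in the WSE's area
area_ratio = WSE_AREA_MM2 / V100_AREA_MM2

# Average transistors per square millimeter across the whole wafer-scale chip
density_per_mm2 = WSE_TRANSISTORS / WSE_AREA_MM2

print(f"WSE area is about {area_ratio:.1f}x a V100 die")
print(f"Average density: about {density_per_mm2 / 1e6:.1f} million transistors per mm^2")
```

Under that assumption the area ratio comes out just under 57, which lines up with the comparison above. The density figure is only an average over the whole chip, since cores, memory and wiring are not spread evenly across the wafer.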

The chip also packs 400,000 compute cores, all optimized for AI workloads.

According to the company, the WSE’s memory architecture is designed to keep all of these cores supplied with data so that they can run at full efficiency.

What Is The Future Of The Wafer Scale Engine?

According to reports, Cerebras Systems does not plan to sell the chip on its own, because such a huge piece of silicon is very difficult to connect and cool. Instead, the WSE will ship as part of a new server that will be installed in data centers.

The company states that WSE ‘reduces the cost of curiosity, accelerating the arrival of the new ideas and techniques that will usher forth tomorrow’s AI.’

This chip is likely to get the ball rolling faster than before, and could help AI advance in leaps and bounds. It should let deep learning practitioners test their theories and hypotheses more quickly.
