If you have watched Joaquin Phoenix’s “Her,” you know what this article is going to be about – people getting emotionally attached to an AI’s voice mode.

OpenAI released the ChatGPT 4o Voice mode in late July 2024. In its safety testing, however, the company found that Voice mode might lead users to develop strong emotional attachments to it.

OpenAI has published a safety analysis describing the risks that AI and Voice mode pose in everyday use.

The analysis takes the form of a System Card for GPT-4o, a detailed technical document that lays out potential risks and the safety testing procedures used to assess them.

People Getting Emotionally Attached To Voice Mode in ChatGPT 4o

The System Card lists potential dangers including aiding the creation of chemical or biological weapons, spreading misleading information, and amplifying societal biases. The document also describes testing meant to ensure that the AI will not try to break free of its controls, deceive people, or devise dangerous plans.

The System Card highlights how quickly AI risks are evolving as powerful new features such as the voice interface are developed. While OpenAI’s Voice mode can handle interruptions and respond quickly, some users thought it was corny.

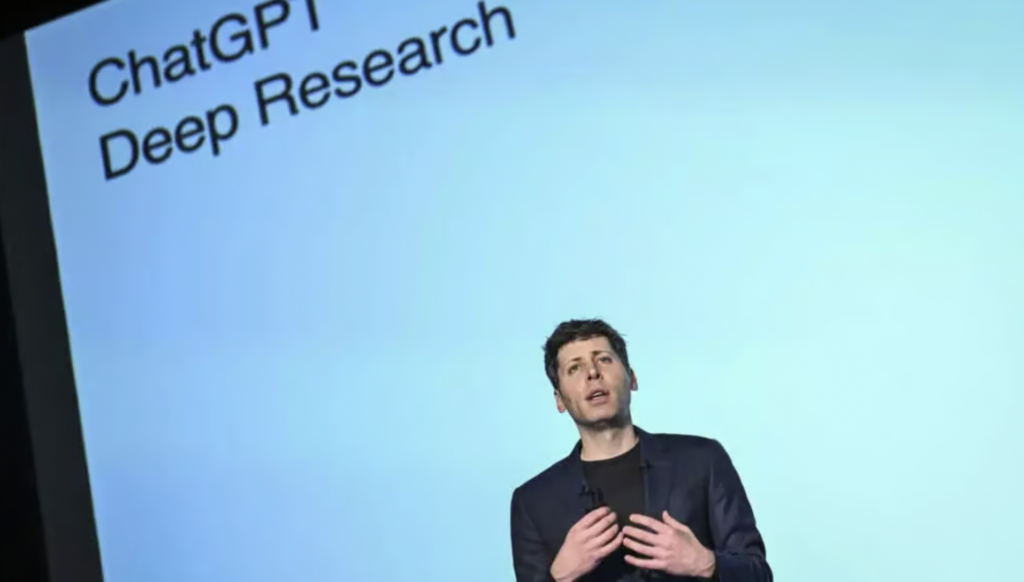

OpenAI CEO Sam Altman has referenced the film “Her,” which explores relationships between humans and artificial intelligence, in connection with the Voice mode. Scarlett Johansson, who voiced the AI in the film, retained legal counsel and asked OpenAI to explain how one of the voices was created, saying it sounded strikingly similar to her own.

The “Anthropomorphization and Emotional Reliance” section of the System Card addresses problems that arise when people attribute human characteristics to AI. During stress testing, users showed signs of forming emotional attachments to the AI, as evidenced by statements such as “This is our last day together.”

Anthropomorphism may also lead users to rely on and trust the AI’s output, even when it is erroneous or “hallucinated.”

AI-Facilitated Social Relationships Might Lessen Users’ Need For Human Interaction

Users who form social relationships with the AI may feel less need for human interaction. That could be an advantage for lonely people, but it could also be a disadvantage if it erodes healthy human relationships.

Voice mode also introduces new vulnerabilities, including the possibility of “jailbreaking” the model through cleverly crafted audio inputs.

A jailbroken Voice mode could be coaxed into imitating a particular person’s speech or attempting to read users’ emotions. When exposed to random noise, Voice mode may also malfunction and exhibit unusual behaviors, such as mimicking the user’s voice.

While some academics commend OpenAI for drawing attention to these hazards, others point out that many risks become apparent only when AI is used in real-world settings.

OpenAI says it has built in multiple safety measures during the development and deployment of GPT-4o.

The company is also focusing its research on the economic effects of omni models and on how tool use could advance model capabilities.