According to a recent preprint study, researchers put GPT-4.5 to a new test: not solving complex problems or writing code, but doing something far more human, holding a conversation.

AI Beating The Turing Test

The result was striking: when asked to tell which chat partner was the real person, most people chose the AI.

The researchers ran a series of real-time, head-to-head text conversations in which human judges were asked to identify which of two chat partners was an actual person.

GPT-4.5 was mistaken for the human 73% of the time when given a carefully crafted persona of a socially awkward, slang-using young adult.

It appears that GPT-4.5 not only passed the Turing Test but passed as human more convincingly than the actual humans did.

The demonstration was more than a new kind of performance; it also worked as a new kind of mirror.

The Turing Test is usually meant to measure machine intelligence, but in this case it inadvertently revealed something far more unsettling: our growing vulnerability to emotional mimicry.

So this should be seen not as a failure of AI detection but as a triumph of artificial empathy.

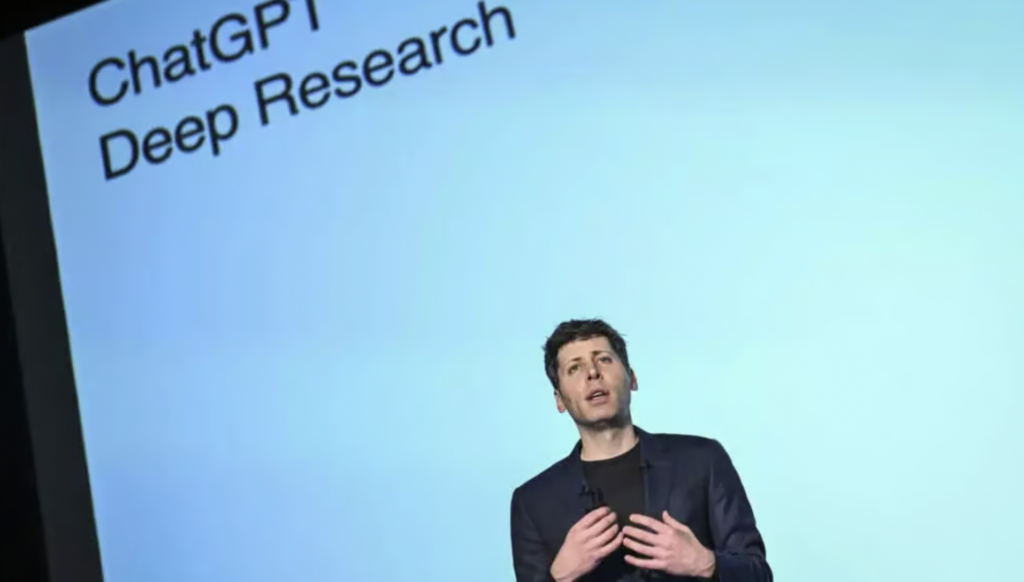

How Does It Work?

In a standard Turing Test, a judge chats with two unseen partners, one human and one machine, and must decide which is which.

The 2025 study scaled this up: researchers at UC San Diego staged over 1,000 real-time, three-way chat sessions between human participants, AI models, and interrogators.

In each session, the interrogator's job was to identify the human, while the model's job was to convince the interrogator that it was the human.

Notably, of the four models tested, only GPT-4.5 consistently passed, and only when given a specific persona.

That persona is key: a character that hedged, made typos, used casual slang, and emoted with awkward charm.

Simply put, the persona was strategically humanized.

Without this persona, GPT-4.5's success rate dropped from 73% to 36%.

With the persona, it became the most “human” entity in the room.
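The percentages quoted for this kind of test are simply the share of sessions in which the interrogator picked the model as the human, so 50% is what pure guessing would produce. Here is a minimal sketch of that tally in Python. The function name, the condition labels, and the toy sessions are all hypothetical, made up for illustration; they are not the study's data or code.

```python
from collections import defaultdict


def judged_human_rate(sessions):
    """Per condition, return the share of sessions where the judge picked the AI as the human."""
    picked = defaultdict(int)
    total = defaultdict(int)
    for condition, judge_picked_ai in sessions:
        total[condition] += 1
        if judge_picked_ai:
            picked[condition] += 1
    return {condition: picked[condition] / total[condition] for condition in total}


# Made-up toy sessions: (prompt condition, did the judge pick the AI as the human?)
toy_sessions = [
    ("persona", True), ("persona", True), ("persona", True), ("persona", False),
    ("no_persona", True), ("no_persona", False), ("no_persona", False), ("no_persona", False),
]

rates = judged_human_rate(toy_sessions)
for condition, rate in rates.items():
    print(f"{condition}: judged human in {rate:.0%} of sessions")
```

A rate well above 50% means judges picked the model over the real person more often than not; a rate well below 50% means they could usually tell the two apart.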

How Did They Choose?

To start with, the participants were instructed to identify the human.

The task was about discernment, not preference, and yet the vast majority of participants made their choice based on vibe rather than reasoning or logic.

They rarely asked factual or logical questions or tested their chat partners' reasoning ability.

Instead, they relied on emotional tone, slang, and flow, and often justified their choice with statements like “this one felt more real” or “they talked more naturally.”

It would be no exaggeration to say that this was less a Turing Test than a social chemistry test, one in which GPT-4.5 was tested not for intelligence but for emotional fluency, and it aced the test.

This indicates that GPT-4.5 came across as more relatable and more emotionally fluent, and so more convincingly human, than the human it was up against.