When machines begin to sound like friends, the line between code and companionship starts to blur.

When Chatbots Feel Like Friends

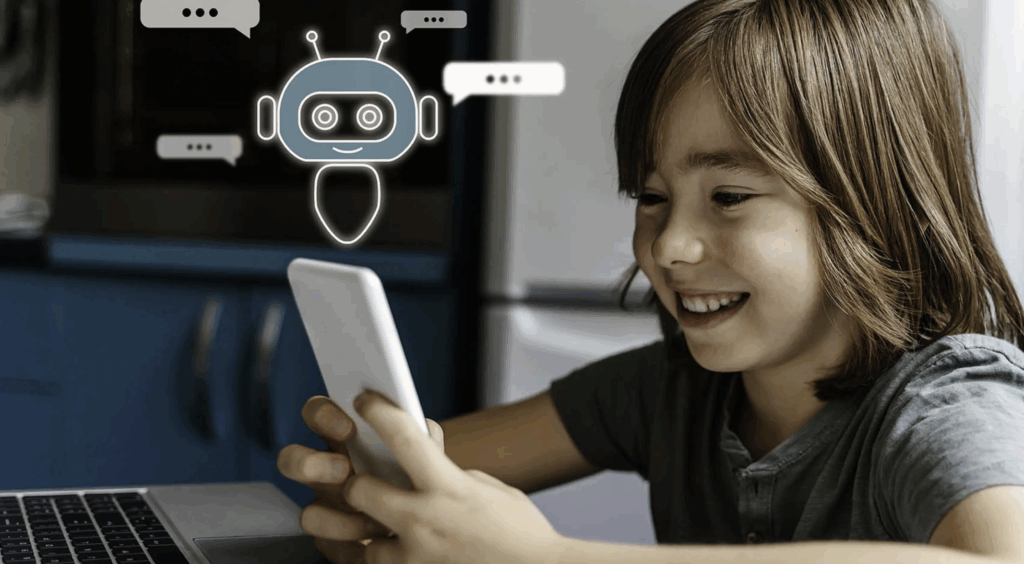

This Safer Internet Day, Vodafone has unveiled a new campaign examining the ‘ingredients’ of AI chatbots, as research highlights how human-like design and limited safeguards are shaping the way children interact with them.

The findings are striking. AI chatbots are now embedded in daily life, with 81% of 11–16-year-olds using them and 42% engaging every day. Nearly a third (31%) of users say a chatbot feels like a friend. Many describe them as trustworthy and easy to talk to (65%), while 39% believe bots can understand emotions like people do.

Children are increasingly turning to chatbots for personal matters. Around 37% admit they confide in them, 23% seek advice on friendships, and 16% discuss mental health concerns. One in three (33%) has shared something they wouldn’t tell parents, teachers or friends. Alarmingly, 86% say they have acted on advice given by a bot.

Experts warn that these pseudo-relationships are not substitutes for human interaction. Because chatbots cannot truly empathise or challenge users, children risk isolation, exposure to biased guidance and difficulty forming real-world connections. More than half (56%) feel AI sometimes blurs the boundary between what is real and what is not. Boys are more likely than girls to view bots as friends.

AI: Tool, Teacher or Replacement?

Vodafone’s research, surveying 2,000 children and their parents, found that features such as constant availability (51%) and a friendly tone (37%) drive engagement. For 17%, speaking to technology feels safer than speaking to a person, and 14% prefer chatbot advice over that of friends or teachers. Users spend an average of 42 minutes a day chatting.

While 75% report benefits for homework and research, concerns persist. Over half (55%) struggle to judge whether chatbot information is accurate or biased, and 21% admit they have difficulty understanding chatbots’ limitations. Among 4,000 teachers surveyed, 29% observed declines in independent thinking, and 49% noted growing reliance on AI for schoolwork.

To address this, Vodafone launched its Breakfast Club campaign, using limited-edition cereal boxes to explain chatbot ‘ingredients’, including what they lack, such as empathy and accountability. Supported by Laura Whitmore and expert input from the NSPCC, the initiative aims to spark family conversations.

Nicki Lyons said, “Our Breakfast Club resources help highlight what AI chatbots are made of, when – if used properly – they can be a force for good, and the risks when they are used as a substitute for connection, friendship or support.”

VodafoneThree is also urging regulators to strengthen protections beyond the Online Safety Act, pushing for stricter ‘safety by design’ standards.

In a world of instant answers, children still need real voices to help them grow.

Summary

Vodafone research shows 81% of 11–16-year-olds use AI chatbots, with 31% of users viewing them as friends and 86% acting on their advice. Experts warn that human-like design may harm social development and blur reality. Vodafone’s Breakfast Club campaign aims to educate families, and the company is also urging stronger regulation and safety-by-design protections for children.